Mosaic AI Vector Search

February 03, 2025

This article gives an overview of Databricks’ vector database solution, Mosaic AI Vector Search, including what it is and how it works.

What is Mosaic AI Vector Search?

Mosaic AI Vector Search is a vector database that is built into the Databricks Data Intelligence Platform and integrated with its governance and productivity tools. A vector database is a database that is optimized to store and retrieve embeddings. Embeddings are mathematical representations of the semantic content of data, typically text or image data. Embeddings are generated by a large language model and are a key component of many GenAI applications that depend on finding documents or images that are similar to each other. Examples are RAG systems, recommender systems, and image and video recognition.

With Mosaic AI Vector Search, you create a vector search index from a Delta table. The index includes embedded data with metadata. You can then query the index using a REST API to identify the most similar vectors and return the associated documents. You can structure the index to automatically sync when the underlying Delta table is updated.

Mosaic AI Vector Search supports the following:

How does Mosaic AI Vector Search work?

Mosaic AI Vector Search uses the Hierarchical Navigable Small World (HNSW) algorithm for its approximate nearest neighbor searches and the L2 distance metric to measure embedding vector similarity. If you want to use cosine similarity, you need to normalize your datapoint embeddings before feeding them into vector search. When the data points are normalized, the ranking produced by L2 distance is the same as the ranking produced by cosine similarity.
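The equivalence between the two rankings follows from the identity ||q - x||^2 = 2 - 2 cos(q, x) for unit-length vectors. The following is a minimal sketch in plain Python that checks this on toy data. The vectors and helper names are hypothetical, and the sketch normalizes the query as well as the data points, which is not required for the ranking to match.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length (raises on the zero vector)."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [c / norm for c in v]

def l2_distance(a, b):
    """Euclidean (L2) distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy embeddings (hypothetical data, not produced by any real model).
docs = {"a": [3.0, 4.0], "b": [1.0, 0.1], "c": [-2.0, 5.0]}
query = [1.0, 1.0]

unit_query = l2_normalize(query)
unit_docs = {key: l2_normalize(vec) for key, vec in docs.items()}

# Rank by ascending L2 distance on the normalized vectors ...
by_l2 = sorted(unit_docs, key=lambda k: l2_distance(unit_query, unit_docs[k]))
# ... and by descending cosine similarity on the raw vectors.
by_cosine = sorted(docs, key=lambda k: cosine_similarity(query, docs[k]), reverse=True)

print(by_l2, by_cosine)
```

Both lists come out in the same order, so normalizing before indexing lets an L2-based index behave like a cosine-similarity index for ranking purposes.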

Mosaic AI Vector Search also supports hybrid keyword-similarity search, which combines vector-based embedding search with traditional keyword-based search techniques. This approach matches exact words in the query while also using a vector-based similarity search to capture the semantic relationships and context of the query.

By integrating these two techniques, hybrid keyword-similarity search retrieves not only documents that contain the exact keywords but also documents that are conceptually similar, providing more comprehensive and relevant search results. This method is particularly useful in RAG applications where source data has unique keywords such as SKUs or identifiers that are not well suited to pure similarity search.

For details about the API, see the Python SDK reference and Query a vector search endpoint.

Similarity search calculation

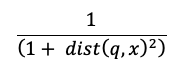

The similarity search calculation uses the following formula:

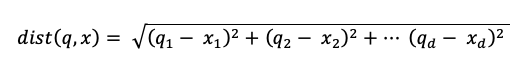

where dist is the Euclidean distance between the query q and the index entry x:
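The score calculation can be sketched in Python as follows. This is a sketch under a stated assumption: it takes the formula referenced above to be the reciprocal of one plus the squared Euclidean distance, 1 / (1 + dist(q, x)^2), which should be confirmed against the formula image. The function names are illustrative only.

```python
import math

def euclidean_distance(q, x):
    """Euclidean distance between query vector q and index entry x."""
    if len(q) != len(x):
        raise ValueError("q and x must have the same dimension")
    return math.sqrt(sum((qi - xi) ** 2 for qi, xi in zip(q, x)))

def similarity_score(q, x):
    """Assumed score form: 1 / (1 + dist(q, x)^2).

    Identical vectors score 1.0; the score falls toward 0 as distance grows.
    """
    d = euclidean_distance(q, x)
    return 1.0 / (1.0 + d * d)

print(similarity_score([0.0, 0.0], [0.0, 0.0]))  # identical vectors
print(similarity_score([0.0, 0.0], [3.0, 4.0]))  # distance 5
```

Under this assumption the score is bounded in (0, 1], and a higher score always means a smaller distance.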

Keyword search algorithm

Relevance scores are calculated using Okapi BM25. All text or string columns are searched, including the source text embedding and metadata columns in text or string format. The tokenization function splits at word boundaries, removes punctuation, and converts all text to lowercase.
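To make the tokenization and scoring steps concrete, here is a minimal sketch in plain Python. It is not the service's implementation: the regular expression is one possible reading of "splits at word boundaries", the BM25 variant (with a smoothed, always-positive IDF) is a common textbook form, and `k1=1.2` and `b=0.75` are conventional defaults rather than values stated in this article.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and keep runs of word characters, dropping punctuation."""
    return re.findall(r"\w+", text.lower())

def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Return one BM25 relevance score per document for the given query."""
    docs_tokens = [tokenize(doc) for doc in documents]
    n_docs = len(docs_tokens)
    avg_len = sum(len(t) for t in docs_tokens) / n_docs if n_docs else 0.0

    # Document frequency: number of documents containing each term.
    doc_freq = Counter()
    for tokens in docs_tokens:
        doc_freq.update(set(tokens))

    query_terms = tokenize(query)
    scores = []
    for tokens in docs_tokens:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query_terms:
            if term not in term_freq:
                continue
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            length_norm = k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / (term_freq[term] + length_norm)
        scores.append(score)
    return scores

# Hypothetical documents: only the first contains the identifier.
corpus = ["Part SKU-123 in stock.", "Unrelated text about shipping."]
print(tokenize("Hello, World! SKU-123"))
print(bm25_scores("SKU-123", corpus))
```

Note how an identifier such as `SKU-123` is split into the tokens `sku` and `123`, so a keyword query for it matches documents containing both tokens regardless of case or punctuation.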

How similarity search and keyword search are combined

The similarity search and keyword search results are combined using the Reciprocal Rank Fusion (RRF) function.

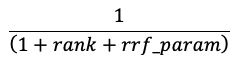

RRF rescores each document from each method using the score:

In the above equation, rank starts at 0, sums the scores for each document and returns the highest scoring documents.

rrf_param controls the relative importance of higher-ranked and lower-ranked documents. Based on the literature, rrf_param is set to 60.
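The fusion step can be sketched as follows. This is a minimal illustration that assumes the standard RRF form, in which each method contributes 1 / (rrf_param + rank) for a document, with rank starting at 0 as stated above. The function name and document IDs are hypothetical, and the normalization step is not included.

```python
from collections import defaultdict

def rrf_fuse(rankings, rrf_param=60):
    """Fuse several ranked lists of document IDs (best first) with RRF.

    Each list contributes 1 / (rrf_param + rank) per document, rank starting
    at 0. Contributions are summed per document, and the result is sorted by
    descending fused score.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking):
            scores[doc_id] += 1.0 / (rrf_param + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical result lists from the two search methods.
similarity_results = ["d1", "d2", "d3"]
keyword_results = ["d3", "d1", "d4"]

fused = rrf_fuse([similarity_results, keyword_results])
for doc_id, score in fused:
    print(doc_id, round(score, 5))
```

Documents that appear near the top of both lists (here `d1` and `d3`) outrank documents that appear in only one list, and a larger `rrf_param` flattens the difference between adjacent ranks.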

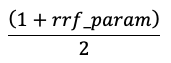

Scores are normalized so that the highest score is 1 and the lowest score is 0 using the following equation:

Options for providing vector embeddings

To create a vector database in Databricks, you must first decide how to provide vector embeddings. Databricks supports three options:

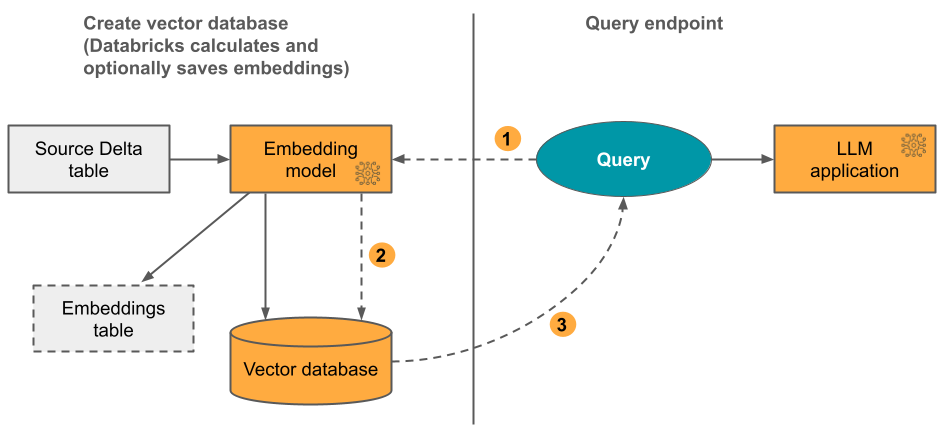

Option 1: Delta Sync Index with embeddings computed by Databricks You provide a source Delta table that contains data in text format. Databricks calculates the embeddings, using a model that you specify, and optionally saves the embeddings to a table in Unity Catalog. As the Delta table is updated, the index stays synced with the Delta table.

The following diagram illustrates the process:

Calculate query embeddings. Query can include metadata filters.

Perform similarity search to identify most relevant documents.

Return the most relevant documents and append them to the query.

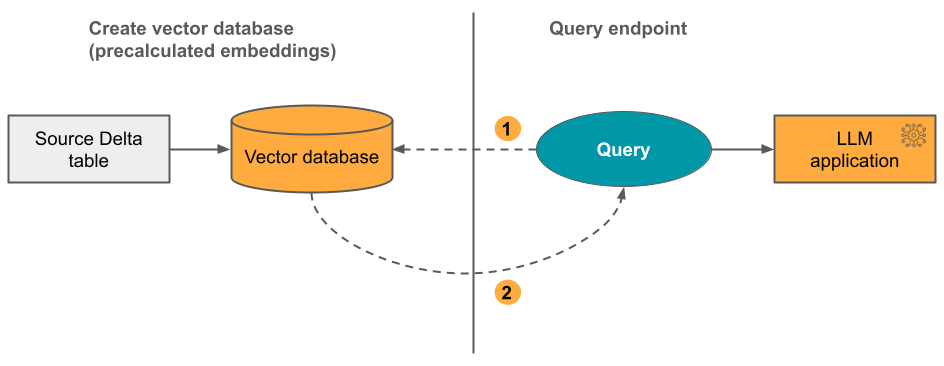

Option 2: Delta Sync Index with self-managed embeddings You provide a source Delta table that contains pre-calculated embeddings. As the Delta table is updated, the index stays synced with the Delta table.

The following diagram illustrates the process:

Query consists of embeddings and can include metadata filters.

Perform similarity search to identify most relevant documents. Return the most relevant documents and append them to the query.
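The two Delta Sync options differ only in which embedding arguments you supply when creating the index. The sketch below builds the keyword arguments for the Python SDK's `VectorSearchClient.create_delta_sync_index` call for each option. The helper `delta_sync_index_args` and every endpoint, table, column, and model name are hypothetical, and the argument names should be checked against the Python SDK reference for your installed version.

```python
def delta_sync_index_args(endpoint_name, source_table_name, index_name, primary_key,
                          *, text_column=None, embedding_model_endpoint=None,
                          vector_column=None, embedding_dimension=None,
                          pipeline_type="TRIGGERED"):
    """Build keyword arguments for creating a Delta Sync Index.

    Option 1 (Databricks computes embeddings): pass text_column and
    embedding_model_endpoint.
    Option 2 (self-managed embeddings): pass vector_column and
    embedding_dimension.
    """
    args = {
        "endpoint_name": endpoint_name,
        "source_table_name": source_table_name,
        "index_name": index_name,
        "primary_key": primary_key,
        "pipeline_type": pipeline_type,
    }
    managed = text_column is not None and embedding_model_endpoint is not None
    self_managed = vector_column is not None and embedding_dimension is not None
    if managed == self_managed:
        raise ValueError(
            "specify either a text column with a model endpoint, "
            "or a vector column with its dimension"
        )
    if managed:
        args["embedding_source_column"] = text_column
        args["embedding_model_endpoint_name"] = embedding_model_endpoint
    else:
        args["embedding_vector_column"] = vector_column
        args["embedding_dimension"] = embedding_dimension
    return args

# Option 1: embeddings computed by Databricks (all names are placeholders).
option_1 = delta_sync_index_args(
    "my_endpoint", "main.docs.articles", "main.docs.articles_index", "id",
    text_column="text", embedding_model_endpoint="my-embedding-endpoint",
)

# Option 2: self-managed embeddings stored in a column of the source table.
option_2 = delta_sync_index_args(
    "my_endpoint", "main.docs.articles", "main.docs.articles_vec_index", "id",
    vector_column="text_vector", embedding_dimension=1024,
)

# In a Databricks workspace, with the databricks-vectorsearch package installed:
# from databricks.vector_search.client import VectorSearchClient
# index = VectorSearchClient().create_delta_sync_index(**option_1)
```

Keeping the argument construction separate from the SDK call makes the difference between the two options easy to review without a workspace connection.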

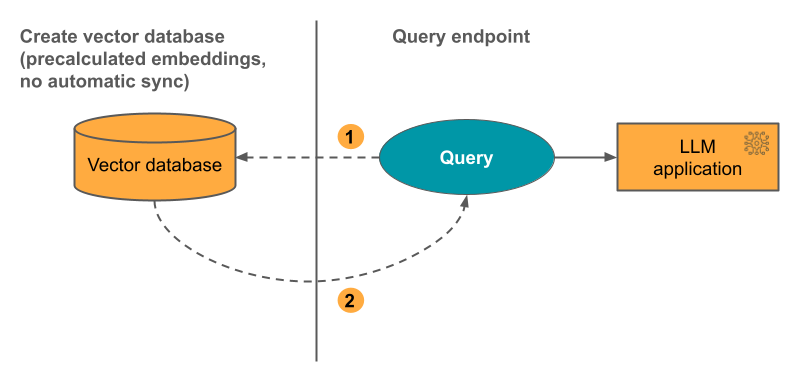

Option 3: Direct Vector Access Index You must manually update the index using the REST API when the embeddings table changes.

The following diagram illustrates the process:
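With a Direct Vector Access Index, your own code is responsible for pushing changes. The sketch below shows one way to organize that with the Python SDK, which wraps the REST API. It assumes the SDK's `create_direct_access_index`, `upsert`, and `delete` calls; the helpers `direct_access_index_args` and `apply_changes`, and all endpoint, index, and column names, are hypothetical.

```python
def direct_access_index_args(endpoint_name, index_name, primary_key,
                             vector_column, embedding_dimension, schema):
    """Build keyword arguments for creating a Direct Vector Access Index."""
    for column in (primary_key, vector_column):
        if column not in schema:
            raise ValueError(f"column {column!r} is missing from the schema")
    return {
        "endpoint_name": endpoint_name,
        "index_name": index_name,
        "primary_key": primary_key,
        "embedding_vector_column": vector_column,
        "embedding_dimension": embedding_dimension,
        "schema": schema,
    }

def apply_changes(index, upserted_rows=(), deleted_keys=()):
    """Push inserts/updates and deletes to the index; nothing syncs automatically."""
    if upserted_rows:
        index.upsert(list(upserted_rows))
    if deleted_keys:
        index.delete(primary_keys=list(deleted_keys))

# Placeholder schema: column name -> type string.
args = direct_access_index_args(
    "my_endpoint", "main.docs.direct_index", "id", "text_vector", 1024,
    schema={"id": "int", "text": "string", "text_vector": "array<float>"},
)

# In a Databricks workspace, with the databricks-vectorsearch package installed:
# from databricks.vector_search.client import VectorSearchClient
# index = VectorSearchClient().create_direct_access_index(**args)
# apply_changes(index,
#               upserted_rows=[{"id": 1, "text": "...", "text_vector": [0.0] * 1024}],
#               deleted_keys=[7])
```

Calling `apply_changes` whenever the embeddings table changes is what replaces the automatic sync that the Delta Sync options provide.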

How to set up Mosaic AI Vector Search

To use Mosaic AI Vector Search, you must create the following:

A vector search endpoint. This endpoint serves the vector search index. You can query and update the endpoint using the REST API or the SDK. See Create a vector search endpoint for instructions.

Endpoints scale up automatically to support the size of the index or the number of concurrent requests. Endpoints do not scale down automatically.

A vector search index. The vector search index is created from a Delta table and is optimized to provide real-time approximate nearest neighbor searches. The goal of the search is to identify documents that are similar to the query. Vector search indexes appear in and are governed by Unity Catalog. See Create a vector search index for instructions.

In addition, if you choose to have Databricks compute the embeddings, you can use a pre-configured Foundation Model APIs endpoint or create a model serving endpoint to serve the embedding model of your choice. See Pay-per-token Foundation Model APIs or Create foundation model serving endpoints for instructions.

To query the vector search index, you use either the REST API or the Python SDK. Your query can define filters based on any column in the Delta table. For details, see Use filters on queries, the API reference, or the Python SDK reference.
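A filtered query through the Python SDK can be sketched as follows. The wrapper `search_index` is hypothetical. It assumes an index object obtained from the SDK (for example with `VectorSearchClient().get_index(...)`) that exposes `similarity_search`. The column names and the dictionary filter syntax shown are illustrative; see Use filters on queries for the supported forms.

```python
def search_index(index, query_text, columns, filters=None, num_results=5, hybrid=False):
    """Run a similarity search, optionally filtered and optionally hybrid."""
    kwargs = {
        "query_text": query_text,
        "columns": list(columns),
        "num_results": num_results,
    }
    if filters:
        kwargs["filters"] = filters        # restrict results by column values
    if hybrid:
        kwargs["query_type"] = "hybrid"    # combine keyword and similarity search
    return index.similarity_search(**kwargs)

# In a Databricks workspace, with the databricks-vectorsearch package installed
# (endpoint, index, and column names are placeholders):
# from databricks.vector_search.client import VectorSearchClient
# index = VectorSearchClient().get_index(
#     endpoint_name="my_endpoint", index_name="main.docs.articles_index")
# results = search_index(index, "how do I rotate keys?",
#                        columns=["id", "text"],
#                        filters={"language": "en"},
#                        num_results=3)
```

Passing `query_text` applies to indexes where Databricks computes the embeddings; for self-managed embeddings the query supplies an embedding vector instead.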

Requirements

Unity Catalog enabled workspace.

Serverless compute enabled. For instructions, see Connect to serverless compute.

Source table must have Change Data Feed enabled. For instructions, see Use Delta Lake change data feed on Databricks.

To create a vector search index, you must have CREATE TABLE privileges on the catalog schema where the index will be created.

Permission to create and manage vector search endpoints is configured using access control lists. See Vector search endpoint ACLs.

Data protection and authentication

Databricks implements the following security controls to protect your data:

Every customer request to Mosaic AI Vector Search is logically isolated, authenticated, and authorized.

Mosaic AI Vector Search encrypts all data at rest (AES-256) and in transit (TLS 1.2+).

Mosaic AI Vector Search supports two modes of authentication:

Service principal token. An admin can generate a service principal token and pass it to the SDK or API. See use service principals. For production use cases, Databricks recommends using a service principal token.

```python
from databricks.vector_search.client import VectorSearchClient

# Pass in a service principal
vsc = VectorSearchClient(
    workspace_url="...",
    service_principal_client_id="...",
    service_principal_client_secret="...",
)
```

Personal access token. You can use a personal access token to authenticate with Mosaic AI Vector Search. See personal access authentication token. If you use the SDK in a notebook environment, the SDK automatically generates a PAT token for authentication.

```python
from databricks.vector_search.client import VectorSearchClient

# Pass in the PAT token
client = VectorSearchClient(workspace_url="...", personal_access_token="...")
```

Customer Managed Keys (CMK) are supported on endpoints created on or after May 8, 2024.

Monitor usage and costs

The billable usage system table lets you monitor usage and costs associated with vector search indexes and endpoints. Here is an example query:

```sql
WITH all_vector_search_usage AS (
  SELECT *,
         CASE WHEN usage_metadata.endpoint_name IS NULL THEN 'ingest'
              WHEN usage_type = 'STORAGE_SPACE' THEN 'storage'
              ELSE 'serving'
         END AS workload_type
  FROM system.billing.usage
  WHERE billing_origin_product = 'VECTOR_SEARCH'
),
daily_dbus AS (
  SELECT workspace_id,
         cloud,
         usage_date,
         workload_type,
         usage_metadata.endpoint_name AS vector_search_endpoint,
         CASE WHEN workload_type = 'serving' THEN SUM(usage_quantity)
              WHEN workload_type = 'ingest' THEN SUM(usage_quantity)
              ELSE null
         END AS dbus,
         CASE WHEN workload_type = 'storage' THEN SUM(usage_quantity)
              ELSE null
         END AS dsus
  FROM all_vector_search_usage
  GROUP BY ALL
  ORDER BY 1,2,3,4,5 DESC
)
SELECT * FROM daily_dbus
```

For details about the contents of the billing usage table, see Billable usage system table reference. Additional queries are in the following example notebook.

Resource and data size limits

The following table summarizes resource and data size limits for vector search endpoints and indexes:

| Resource | Granularity | Limit |
|---|---|---|
| Vector search endpoints | Per workspace | 100 |
| Embeddings | Per endpoint | 320,000,000 |
| Embedding dimension | Per index | 4096 |
| Indexes | Per endpoint | 50 |
| Columns | Per index | 50 |
| Columns | Supported types: Bytes, short, integer, long, float, double, boolean, string, timestamp, date | |
| Metadata fields | Per index | 50 |
| Index name | Per index | 128 characters |

The following limits apply to the creation and update of vector search indexes:

| Resource | Granularity | Limit |
|---|---|---|
| Row size for Delta Sync Index | Per index | 100 KB |
| Embedding source column size for Delta Sync Index | Per index | 32764 bytes |
| Bulk upsert request size limit for Direct Vector index | Per index | 10 MB |
| Bulk delete request size limit for Direct Vector index | Per index | 10 MB |
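One way to stay under the bulk upsert limit is to split rows into batches by serialized size before sending them. The sketch below is illustrative only: the helper `batch_rows` is hypothetical, it reads the 10 MB limit as 10,000,000 bytes (a conservative assumption), and it measures the JSON encoding of the rows alone, so leave headroom for any request envelope.

```python
import json

# Assumption: the 10 MB limit is read conservatively as 10,000,000 bytes.
MAX_REQUEST_BYTES = 10 * 1000 * 1000

def batch_rows(rows, max_bytes=MAX_REQUEST_BYTES):
    """Yield lists of rows whose JSON-serialized size stays within max_bytes."""
    batch, batch_bytes = [], 2            # 2 bytes for the enclosing "[]"
    for row in rows:
        # Serialized row plus a 2-byte ", " separator (slight overestimate).
        row_bytes = len(json.dumps(row).encode("utf-8")) + 2
        if 2 + row_bytes > max_bytes:
            raise ValueError("a single row exceeds the request size limit")
        if batch and batch_bytes + row_bytes > max_bytes:
            yield batch
            batch, batch_bytes = [], 2
        batch.append(row)
        batch_bytes += row_bytes
    if batch:
        yield batch

# Hypothetical rows and a tiny limit so the batching is visible.
rows = [{"id": i, "text": "x" * 40} for i in range(10)]
for batch in batch_rows(rows, max_bytes=200):
    print(len(batch), len(json.dumps(batch).encode("utf-8")))
```

Each yielded batch can then be passed to a single upsert call on a Direct Vector Access Index.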

The following limits apply to the query API.

| Resource | Granularity | Limit |
|---|---|---|
| Query text length | Per query | 32764 bytes |
| Maximum number of results returned | Per query | 10,000 |
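A client can check these query limits before sending a request. The following is a small sketch, not part of the SDK: `validate_query` is a hypothetical helper, and it assumes the query text length is measured in UTF-8 encoded bytes.

```python
MAX_QUERY_TEXT_BYTES = 32764   # query text length limit from the table above
MAX_RESULTS = 10_000           # maximum number of results per query

def validate_query(query_text, num_results):
    """Return a list of limit violations; an empty list means the query is within limits."""
    problems = []
    # Assumption: the limit applies to the UTF-8 encoding of the text.
    size = len(query_text.encode("utf-8"))
    if size > MAX_QUERY_TEXT_BYTES:
        problems.append(
            f"query text is {size} bytes; the limit is {MAX_QUERY_TEXT_BYTES}"
        )
    if not 1 <= num_results <= MAX_RESULTS:
        problems.append(
            f"num_results must be between 1 and {MAX_RESULTS}, got {num_results}"
        )
    return problems

print(validate_query("hello", 10))
print(validate_query("é" * 20000, 10))  # 2 bytes per character in UTF-8
```

Counting bytes rather than characters matters for non-ASCII text, where a query can exceed the limit with far fewer than 32764 characters.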